Our two-part blog post published at the end of 2017 explored the Amazon Alexa APIs and their applicability for in-vehicle use. At CES 2018, Amazon announced Alexa extensions specifically for vehicles. This post provides an overview of the newly announced features, based on information provided at the Alexa CES conference and through subsequent public statements.

Alexa Automotive Core

The new automotive extension to the Alexa Voice Service (AVS) is called Alexa Automotive Core (AAC). AAC is an SDK that allows OEMs to integrate Alexa into their vehicles and provide in-vehicle specific commands in addition to the standard Alexa features. Though the SDK is not currently publicly available, Amazon is providing select access to OEMs and Tier-1 suppliers. Panasonic was one of the first companies to commit to using the new interface at CES and demonstrated it as part of its Skip Gen IVI technology.

Architecture

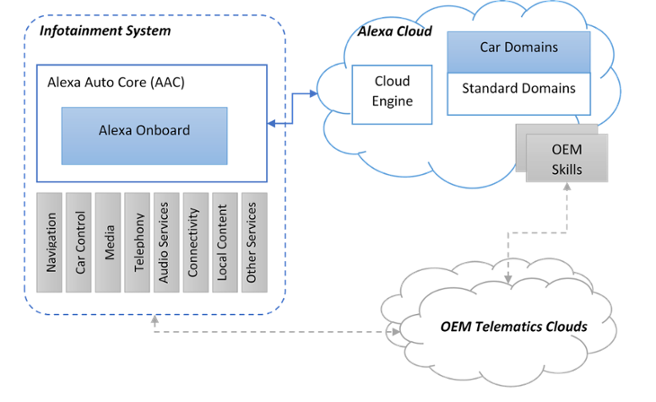

The diagram below outlines the architecture of AAC based on the CES conference, with some blocks generalized.

AAC supports multiple operating systems used in automotive environments, such as AGL, Android, Linux and QNX. It provides APIs to integrate with in-vehicle services such as navigation, climate control, local media, telephony, and more. This enables Alexa users to ask for status and request actions that are relevant to the in-car experience. AAC can also include Alexa Onboard, an on-board speech recognition engine that can be used if the vehicle doesn’t have internet connectivity.

If the vehicle does have internet connectivity, AAC communicates with the same Alexa Cloud and Alexa Voice Service (AVS) used by all other Alexa devices. In the cloud, the Alexa architecture can be broken into the three sub-systems shown in the diagram. All speech data from the device is first processed by a Cloud Engine, which is responsible for understanding the spoken language and converting it into specific intents, i.e., the commands that the user is requesting.

An intent can belong to one of the predefined Alexa functions, called domains. For example, a request for a weather forecast is directed to the weather domain. In-home Alexa devices use multiple standard domains, such as music, shopping list, information, and home automation. As part of the automotive initiative, Amazon is adding car-specific domains to allow in-vehicle control. Examples include navigation, car control (climate control, window opening/closing, etc.), and traffic.

This way, while in the vehicle, the user can ask for the weather, request to open the garage door, set the temperature in the car, or direct the navigation system to calculate a route. The commands are understood by the Alexa cloud (or by Alexa Onboard if there is no internet connectivity) and then passed to AAC, which in turn instructs the local services to carry out the actual command in the vehicle.
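The flow from a recognized intent to a local vehicle service can be sketched roughly as follows. Since the AAC SDK is not public, this is only an illustration of the idea: `Intent`, `VehicleBridge`, the domain names, and the two service functions are all hypothetical and are not taken from Amazon's API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class Intent:
    """A recognized request: its domain, the action, and any parameters (slots)."""
    domain: str
    action: str
    slots: Dict[str, str] = field(default_factory=dict)


class VehicleBridge:
    """Hypothetical stand-in for the layer that forwards intents to local services."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Callable[[Intent], str]] = {}

    def register(self, domain: str, handler: Callable[[Intent], str]) -> None:
        # Each in-vehicle service registers for the domain it can serve.
        self._handlers[domain] = handler

    def dispatch(self, intent: Intent) -> str:
        # Look up the local service by domain and let it carry out the command.
        handler = self._handlers.get(intent.domain)
        if handler is None:
            return f"No local service registered for domain '{intent.domain}'"
        return handler(intent)


def climate_service(intent: Intent) -> str:
    # Illustrative car-control handler; a real one would talk to the vehicle bus.
    if intent.action == "set_temperature":
        return f"Cabin temperature set to {intent.slots['degrees']} degrees"
    return f"Unsupported climate action: {intent.action}"


def navigation_service(intent: Intent) -> str:
    # Illustrative navigation handler.
    if intent.action == "route_to":
        return f"Calculating route to {intent.slots['destination']}"
    return f"Unsupported navigation action: {intent.action}"


bridge = VehicleBridge()
bridge.register("car_control", climate_service)
bridge.register("navigation", navigation_service)

print(bridge.dispatch(Intent("car_control", "set_temperature", {"degrees": "21"})))
print(bridge.dispatch(Intent("navigation", "route_to", {"destination": "the airport"})))
```

Keeping the dispatch table keyed by domain mirrors the description above: speech understanding produces an intent, and the vehicle side only needs to know which local service owns that domain.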

As described in our previous blogs, Alexa also provides a mechanism to support custom voice commands that other vendors can create using the Alexa Skills Kit. Car manufacturers can create custom skills to control vehicles remotely (from home Alexa devices) or to provide additional functions inside the vehicle that are not supported by Amazon’s car domains.
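As a rough illustration of what such a custom skill might look like on the backend, the sketch below handles a request in the general shape of the JSON that Alexa sends to custom skills and returns a plain-text speech response. It deliberately uses no SDK. The intent name `LockDoorsIntent`, the vehicle identifier, and the `send_lock_command` function are hypothetical placeholders for whatever an OEM's own telematics service would provide.

```python
from typing import Any, Dict


def build_speech_response(text: str) -> Dict[str, Any]:
    """Wrap plain text in the response envelope used by custom skills."""
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": text},
            "shouldEndSession": True,
        },
    }


def send_lock_command(vehicle_id: str) -> bool:
    """Hypothetical stand-in for an OEM telematics call; always succeeds here."""
    return True


def handle_request(event: Dict[str, Any]) -> Dict[str, Any]:
    """Route an incoming skill request to the matching intent handler."""
    request = event.get("request", {})
    if request.get("type") != "IntentRequest":
        # Launch or other non-intent requests get a short prompt.
        return build_speech_response("Welcome. You can ask me to lock your car.")

    intent_name = request.get("intent", {}).get("name")
    if intent_name == "LockDoorsIntent":  # hypothetical intent name
        ok = send_lock_command("demo-vehicle")
        message = "Your car is locked." if ok else "Sorry, I could not reach your car."
        return build_speech_response(message)

    return build_speech_response("Sorry, I don't know that command.")


sample_event = {"request": {"type": "IntentRequest", "intent": {"name": "LockDoorsIntent"}}}
print(handle_request(sample_event)["response"]["outputSpeech"]["text"])
```

The same handler could serve a request that originates from a home device or from the vehicle itself, which is the remote-control scenario described above.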

Alexa Onboard

Perhaps the most significant new announcement from Amazon is Alexa Onboard. This feature provides an onboard speech recognition engine, natural language processing and key domain logic, allowing the end-user to interact with Alexa without internet connectivity. In the Panasonic demonstration at CES, this feature was used to change the climate settings in the vehicle via voice with no connectivity. For this to work, the in-vehicle system needs to be powerful enough to handle sophisticated speech recognition and natural language processing.

Some features such as weather, music streaming, shopping list and home automation will not work without internet connectivity, but Amazon maintains that key car domain features will work.
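The split between cloud-only and onboard-capable features can be pictured as a simple selection rule. The sketch below is an assumption-laden illustration: the domain labels are taken from the examples in this post, and the exact set of domains that Alexa Onboard supports was not specified beyond "key car domain features", so the contents of `ONBOARD_DOMAINS` are a guess.

```python
from typing import Set

# Features named above as needing internet connectivity.
CLOUD_ONLY_DOMAINS: Set[str] = {"weather", "music", "shopping_list", "home_automation"}

# Assumption: climate control was demonstrated offline; navigation is a guess.
ONBOARD_DOMAINS: Set[str] = {"car_control", "navigation"}


def choose_engine(domain: str, online: bool) -> str:
    """Return which engine would handle a request for the given domain."""
    if online:
        # With connectivity, every domain goes to the cloud.
        return "cloud"
    if domain in ONBOARD_DOMAINS:
        # Without connectivity, only onboard-capable domains still work.
        return "onboard"
    return "unavailable"


for domain in ("car_control", "weather"):
    print(domain, "->", choose_engine(domain, online=False))
```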

Amazon mentioned that some custom skills can also be used in onboard mode. It wasn’t clear how this would be achieved, though one can assume it will involve compiling custom skills and providing them as part of the AAC SDK.

Conclusion

This new announcement by Amazon clearly shows that the company has ambitions for Alexa that go beyond the home and into the car. A mobile Alexa device in the car is not enough to provide a rich user experience without deeper integration and the ability to control the car using voice. Until now, that could only be achieved using custom skills, but the new Alexa Automotive Core makes this integration easier, ultimately improving the end-user experience.

Alexa Onboard is another big step toward improving the user experience in the car. By enabling key car-related Alexa commands to continue to work despite unreliable internet connectivity, Amazon is greatly expanding the potential for in-vehicle usage.

During the CES Alexa conference, Amazon speakers highlighted voice as the key to the future of user interfaces. In vehicles especially, voice is the ideal interface to minimize driver distraction and improve safety. Car companies have been trying for years to enable useful voice services in the vehicle, but until now nobody has succeeded in creating a good experience. The latest developments around Alexa and the emergence of cloud-based virtual assistants in general have the potential to create a true breakthrough, one that could see us all talking to our cars a lot more often in the future.